Hive is a data warehouse software built on top of Hadoop that facilitates the management of large datasets, there are some important features to know:

- It has tools to access data via SQL commands

- It allows access to files stored in Apache HDFS or HBase

- The query execution could be integrated with Apache Spark or Apache Tez

- Hive’s SQL could be extended via User Defined Functions (UDFs), User Defined Aggregates (UDAFs) and User Defined Table functions (UDTFs)

- Hive is designed for data warehousing tasks and not for online transaction processing workloads

- Hive is designed to scale horizontally using the Hadoop cluster, then it could maximize scalability

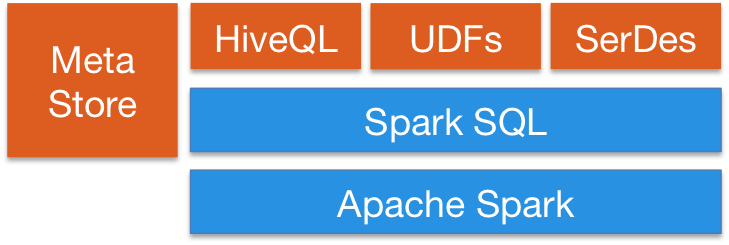

Now that we have an overview / understanding about Hive, and if we need to execute queries for Big Data, an excellent integration we can do is with Spark SQL, since it supports HiveQL syntax, Hive UDFs and Hive SerDes allowing us to access existing Hive warehouses and gain all the amazing features of the Apache Spark like cost-based optimizer, code generation for queries, scalability and multi hour queries using the Spark engine (for fault tolerance).

import java.io.File

import org.apache.spark.sql.{Row, SaveMode, SparkSession}

case class Record(key: Int, value: String)

val warehouseLocation = new File("spark-warehouse-dir").getAbsolutePath

val spark = SparkSession

.builder()

.appName("Spark Hive Test")

.config("spark.sql.warehouse.dir", warehouseLocation)

.enableHiveSupport()

.getOrCreate()

import spark.implicits._

import spark.sql

sql("SELECT * FROM hive_table").show()

I didn’t test an integration with Apache Tez yet, but it seems so powerful processing Directed Acyclic Graphs (DAGs) and with similar performance as Apache Spark. An interesting comparison about it could be found in https://www.integrate.io/blog/apache-spark-vs-tez-comparison/

References

https://cwiki.apache.org/confluence/display/Hive/Home

https://spark.apache.org/docs/latest/sql-data-sources-hive-tables.html